CARLO ROSELLI

A NEW IMAGE OF REALITY

Author: Carlo Roselli

Title: A new image of reality (provisional)

Contents

A brief history of the origin and development of modern science

Introduction: the search for a final theory

I The enigmatic world of quanta

II The measurement problem in Quantum Mechanics: the DAP experiment

III The role of paradox in the scientific context

IV Gödel’s influence in mathematics and physics

V In search of new concepts of space and matter

VI On modern cosmology and the question of why there is something

VII The problem of consciousness

VIII Into the depth of nothingness

IX Elementary physical systems: intrinsic and relational properties

X Spherical…Waves

XI Self-organization of atomic systems

XII An EPR-Bohm type experiment based on a radically new description of spin

Conclusions

Acknowledgements

Bibliography

Index of names

© 2000, 2004, 2009, 2014, 2019 Carlo Roselli

N.B. Throughout the text, very large and very small numbers recur frequently and are expressed exponentially, for example, 10¹⁵ (= 1,000,000,000,000,000) or 10⁻¹⁵ (= 1/10¹⁵ = 0.000 000 000 000 001).

Astronomical distances are expressed in light years (one light year, the distance travelled by light in one year, is approximately 9.46 × 10¹² km, i.e. close to 10¹³ km), or in parsecs (pc) and megaparsecs (Mpc), equivalent to about 3.26 light years and 3.26 million light years, respectively.
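The conversions above can be checked with a short calculation. This is a sketch under stated assumptions: the light year is taken as the distance light travels in one Julian year (365.25 days), and the parsec uses the IAU definition of 648000/π astronomical units; the constant names are illustrative.

```python
import math

# Assumed conventions: the light year uses the Julian year (365.25 days);
# the parsec uses the IAU definition of 648000/pi astronomical units.
C_KM_PER_S = 299_792.458          # speed of light, km/s (exact)
JULIAN_YEAR_S = 365.25 * 86_400   # seconds in a Julian year
AU_KM = 149_597_870.7             # astronomical unit, km (exact)


def light_year_km() -> float:
    """Distance travelled by light in one Julian year, in km."""
    return C_KM_PER_S * JULIAN_YEAR_S


def parsec_km() -> float:
    """Distance at which one astronomical unit subtends one arcsecond, in km."""
    return AU_KM * 648_000 / math.pi


ly = light_year_km()
pc = parsec_km()
print(f"1 light year ~ {ly:.3e} km")
print(f"1 parsec     ~ {pc:.3e} km ~ {pc / ly:.2f} light years")
print(f"1 megaparsec ~ {1e6 * pc / ly:.3e} light years")
```

The ratio printed on the second line is the origin of the "about 3.26 light years per parsec" figure.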

Origin and development of modern science

The question of whether a unifying principle lies behind the various properties of the physical universe runs through the entire development of Western philosophical thought, which originated in Greece in the 7th century B.C. From then on, the mythological vision of the world, with its irrational depiction of events, was abandoned in favour of an approach based on reasoning and on the observation of phenomena. The first physical theories then emerged, succeeding one another over the centuries and formulated exclusively in philosophical language.

The birth of modern science dates back to the work of Galileo Galilei (1564-1642), who introduced into physics the method of reductionism, according to which everything can be interpreted in terms of simpler components and expressed in mathematical language. He realized that only by following this criterion, founded on the observation of phenomena, on mathematics and on experiment, was it possible to proceed, step by step, towards a complete description of physical reality.

His ideas and observations on the motion and fall of bodies, together with his experiments accompanied by meticulous mathematical descriptions, were a fundamental contribution to the later development of modern science, beginning with the set of physical laws initiated by Galileo and formulated by Isaac Newton (1643-1727) in his masterpiece Philosophiae Naturalis Principia Mathematica (1687). Galileo was also a philosopher, but, in keeping with the criteria he adopted in his research, he refrained from extending his work into a unified philosophical explanation of the universe. Philosophical theories, however fascinating, do not enjoy the same credibility as scientific theories, although one must admit that some of them, for example the atomic hypothesis of Leucippus and Democritus, anticipated ideas of contemporary physics. From Galileo onwards, therefore, scientific research has been based exclusively on the close interaction between theory and experiment.

A theory is a mathematical model capable of making predictions about a given range of phenomena; it emerges from the mind’s capacity to recognize, within a large quantity of observed data, a structure that allows an abbreviated representation. The more abbreviated the representation, the deeper and more far-reaching the theory. Furthermore, in order to validate a physical theory, modern science adopts the falsifiability criterion proposed by Karl R. Popper (1902-1994), according to which a theory is genuinely scientific only if it is testable, at least in principle. However many tests it survives, it can never be declared invulnerable: it is considered valid until proven wrong. In short, a theory is accepted as scientific only if it is falsifiable.

Given a choice between two conflicting scientific theories, founded on different hypotheses, which attempt to explain the same phenomena, one opts for the theory of greater depth and scope, i.e. the one that explains more precisely the class of phenomena also predicted by the other theory, as well as additional phenomena that contradict the other theory’s predictions. The chosen theory supplants the other by incorporating it as a limiting case, valid within a certain degree of predictive accuracy. For instance, Newton’s theory of gravitation has been subsumed by the General Theory of Relativity, formulated in 1915 by Albert Einstein (1879-1955). Newton’s theory established a law for the motion of bodies based on the idea of an attractive force, but it could not explain the origin of that force. Although it could not account for the anomalies of Mercury’s orbit, it was regarded as an undisputed truth for over two centuries, until Einstein traced the motion of celestial bodies to the geometrical properties of space-time determined by the presence of masses, thus giving a different description of the gravitational force.

After abandoning the old Newtonian concepts of homogeneous, absolute three-dimensional space and of absolute time, Einstein turned to the notion of “field”, already introduced into physics by Faraday and Maxwell, but reinterpreted it in the light of a new idea: the gravitational field is space, conceived as an extension of bodies and indissolubly connected with time. This is the so-called “four-dimensional curved space-time continuum”. The curvature of space-time is determined by the distribution of matter and energy: every material body, through its presence and motion in space-time, changes the configuration of the field it generates, and this change propagates outward from the body at the speed of light, influencing the motion of other bodies. The non-Newtonian effects of space-time curvature are measurable near large masses such as the sun, and even more so in the vicinity of extraordinarily strong gravitational fields, such as those generated by neutron stars or black holes, which are true space-time traps from which almost nothing seems capable of escaping.

The ultimate objective of contemporary science is to find a unified and rational description of the laws that govern the world. However, the road towards this goal has been strewn with obstacles, which emerged in the wake of the great scientific discoveries of the first decades of the 20th century and raised many doubts even about the very conception of science as theory. The main obstacle arises in every attempt to unify the two currently dominant physical theories: general relativity, which describes the macroscopic aspects of our universe, and quantum theory, which describes the microscopic structure of matter.

Both theories have incomprehensible features. On the one hand, general relativity implies the paradoxical notion of a singularity inside a black hole, which could form under particular circumstances, when a mass greater than a critical value is involved; beyond that value the theory predicts infinite values for the density of matter and the curvature of space: the mass would irresistibly contract to a zero-dimensional point, thereby eliminating the indissoluble connection between space and time that is fundamental to the theory. On the other hand, quantum theory has peculiarities that defy common logic and cannot be reduced to an intuitively comprehensible description in classical language.

The origin of quantum theory dates back to December 1900, when Max Planck (1858-1947) found a formal explanation of the law governing the spectral distribution of black-body radiation (a black body can be modelled as a cavity with perfectly absorbing walls into which energy can be pumped), thus solving the problem of the “infinite emission of radiation” predicted by the Rayleigh-Jeans law, better known as the “ultraviolet catastrophe”. Until then physicists had assumed that electromagnetic waves in the cavity could oscillate with arbitrarily short wavelengths, so that, in theory, the radiation energy inside the cavity would grow without limit, whereas experiments always gave finite values.
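The contrast between the classical prediction and Planck’s law can be made concrete numerically. The sketch below uses the standard textbook forms of the two spectral energy densities; the temperature (5000 K) and the sample frequencies are arbitrary illustrative choices, not values from the text.

```python
import math

H = 6.62607015e-34   # Planck constant, J·s (exact SI value)
K_B = 1.380649e-23   # Boltzmann constant, J/K (exact SI value)
C = 299_792_458.0    # speed of light, m/s (exact)


def rayleigh_jeans(nu: float, T: float) -> float:
    """Classical spectral energy density in J·s/m^3; grows as nu^2 without bound."""
    return 8 * math.pi * nu**2 * K_B * T / C**3


def planck(nu: float, T: float) -> float:
    """Planck's spectral energy density; the exponential suppresses high frequencies."""
    x = H * nu / (K_B * T)
    # expm1(x) = exp(x) - 1, accurate for small x (the low-frequency limit)
    return 8 * math.pi * H * nu**3 / C**3 / math.expm1(x)


T = 5000.0  # cavity temperature in kelvin (illustrative)
for nu in (1e12, 1e14, 1e15, 1e16):
    print(f"nu = {nu:.0e} Hz  RJ = {rayleigh_jeans(nu, T):.3e}  Planck = {planck(nu, T):.3e}")
```

At low frequencies the two formulas agree; at high frequencies the classical density keeps growing while Planck’s falls towards zero, which is why the total energy stays finite.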

Planck realized that a theoretical result which made no sense called for a new idea. He assumed that energy could exist only in packets, called “quanta”. He thus introduced a discrete element, h, called the “minimum quantum of action” or “fundamental constant of nature”, defined by the relation E = nhν, i.e. nh = E/ν (where E is the energy of the electromagnetic wave, ν is its frequency and n is a positive integer). Since frequency is the inverse of a time (ν = 1/T, and therefore nh = ET), the constant h has the dimensions of an energy multiplied by a time; its value is 6.63 × 10⁻³⁴ J·s (joule-seconds in SI units). In this relation either of the two quantities, E or T, can be as small as you like, but never zero (since in that case there would be no action), and the two quantities, being linked through the constant h, balance each other: the smaller one is, the larger the other must be.
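The relation E = nhν and its equivalent form nh = ET can be illustrated with a few lines of arithmetic. This is a minimal sketch: the frequency (5 × 10¹⁴ Hz, visible light of about 600 nm) is an illustrative assumption, and the function name is hypothetical.

```python
H = 6.62607015e-34  # Planck constant, J·s (exact SI value since 2019)


def energy_of_quanta(n: int, nu: float) -> float:
    """Energy E = n*h*nu of n quanta of frequency nu (in Hz), in joules."""
    if n < 1:
        raise ValueError("n must be a positive integer: zero quanta means no action")
    return n * H * nu


nu = 5.0e14          # illustrative frequency of visible light (about 600 nm), Hz
period = 1.0 / nu    # T = 1/nu, in seconds
for n in (1, 2, 3):
    E = energy_of_quanta(n, nu)
    # E*T/n gives back h: an energy multiplied by a time (units of action, J·s)
    print(f"n = {n}: E = {E:.3e} J, E*T/n = {E * period / n:.3e} J·s")
```

Energy comes only in whole multiples of hν, and dividing E·T by n always returns the same constant h.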

A further significant advance in quantum physics came in 1905, when Einstein explained the photoelectric effect, an interaction between light and matter that cannot be explained by classical physics, in which light is described as an electromagnetic wave. The maximum kinetic energy of the electrons emitted, for example, by a metal sheet does not depend on the intensity of the light, as classical wave theory would lead one to expect, but increases linearly with its frequency: Kmax = hν − W, where W is the work function of the metal, so that no electrons are emitted below a threshold frequency.
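Einstein’s relation can be sketched directly. The work function of about 2.3 eV is an approximate value for sodium, assumed here for illustration; the function name is hypothetical. Note that the light intensity does not appear anywhere in the formula: in Einstein’s picture it changes only the number of emitted electrons, not their maximum energy.

```python
H_EV = 4.135667696e-15  # Planck constant in eV·s


def max_kinetic_energy_ev(nu: float, work_function_ev: float) -> float:
    """Einstein's relation Kmax = h*nu - W; returns 0.0 below the threshold frequency."""
    return max(0.0, H_EV * nu - work_function_ev)


W_SODIUM = 2.3  # approximate work function of sodium in eV (assumed value)
for nu in (4.0e14, 6.0e14, 8.0e14, 1.0e15):
    k = max_kinetic_energy_ev(nu, W_SODIUM)
    print(f"nu = {nu:.1e} Hz -> Kmax = {k:.2f} eV")
```

Below roughly 5.6 × 10¹⁴ Hz no electrons are released at all, however intense the light; above it, Kmax rises in equal steps for equal steps in frequency.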

Another important advance came in 1913, when Niels Bohr (1885-1962) introduced the concept of “quantum state”. This innovative concept, which stems from Planck’s idea of the “elementary quantum of action” (as well as from Einstein’s contributions on the corpuscular nature of light), proved extremely fruitful. First of all, it enabled Bohr to solve the problem posed by the atomic model conceived at that time by the physicist Ernest Rutherford (1871-1937), which, being based on Newtonian mechanics, could not explain the stability of the electrons around the atomic nucleus (classical physics predicted that the electrons of an atom, continuously radiating energy, would eventually fall into the nucleus). Bohr proposed a coherent description of the motion of electrons in terms of quantized orbits, starting from the orbit closest to the nucleus, called the “ground state” of the electron. When it absorbs light whose quantum of energy matches the difference between two levels, the electron jumps to a higher orbit without occupying any of the orbits in between. Later it also became clear that the concept of quantum state had to be associated with the dual nature of electrons (and of all other microscopic particles, such as photons, protons, atoms and molecules), which sometimes behave as waves and sometimes as material particles, depending on the way in which an observer conducts the experiments designed to study their properties.
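For the hydrogen atom, Bohr’s quantized orbits correspond to the energy levels Eₙ = −13.6 eV/n², and a jump between two orbits absorbs or emits a single photon whose energy equals the difference between the levels. The sketch below assumes an infinitely heavy nucleus (no reduced-mass correction), so its wavelengths differ slightly from measured ones; the function names are hypothetical.

```python
RYDBERG_EV = 13.605693  # hydrogen ground-state binding energy, eV (infinite nuclear mass)
HC_EV_NM = 1239.84198   # h*c in eV·nm


def level_energy_ev(n: int) -> float:
    """Bohr energy of the n-th orbit of hydrogen, E_n = -13.6 eV / n^2."""
    return -RYDBERG_EV / n**2


def transition_wavelength_nm(n_low: int, n_high: int) -> float:
    """Wavelength of the photon absorbed in the jump n_low -> n_high (or emitted in reverse)."""
    delta_e = level_energy_ev(n_high) - level_energy_ev(n_low)
    return HC_EV_NM / delta_e


for n in (1, 2, 3):
    print(f"E_{n} = {level_energy_ev(n):.3f} eV")
print(f"jump 2 -> 3: lambda = {transition_wavelength_nm(2, 3):.1f} nm")
print(f"jump 1 -> 2: lambda = {transition_wavelength_nm(1, 2):.1f} nm")
```

The 2 → 3 transition gives red light near 656 nm, the well-known H-alpha line; intermediate energies are simply not available to the electron.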

Finally, in 1926, all the above concepts led quantum physics to a complete theoretical formulation known as Quantum Mechanics. It rests on Bohr’s work, based on the complementarity principle; on the differential equation of Erwin Schrödinger (1887-1961), which describes the linear, deterministic and reversible time evolution of the state vector of any physical system; on the work of Max Born (1882-1970), whose statistical interpretation of the wave function, together with the postulate of wave-packet reduction (the indeterministic and irreversible process that occurs when a measurement is performed on the system), underlies the probabilistic assertions of the theory; and on the work of Werner K. Heisenberg (1901-1976), based on the uncertainty principle. This principle establishes the impossibility of measuring with arbitrary precision pairs of conjugate variables of a quantum particle, for example its position x and its momentum p, and sets an absolute lower limit on the product of the two uncertainties, Δx and Δp, expressed in Heisenberg’s well-known inequality Δx Δp ≥ ħ/2 (where ħ is Planck’s constant h divided by 2π).
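To give a sense of scale, the inequality Δx Δp ≥ ħ/2 can be applied to an electron confined within a region about the size of an atom. The confinement length of 10⁻¹⁰ m is an illustrative assumption; the function name is hypothetical.

```python
HBAR = 1.054571817e-34   # reduced Planck constant, J·s
M_E = 9.1093837015e-31   # electron mass, kg


def min_momentum_spread(delta_x: float) -> float:
    """Smallest momentum spread allowed by dx*dp >= hbar/2 for a position spread delta_x (m)."""
    return HBAR / (2 * delta_x)


delta_x = 1e-10  # roughly the size of an atom, m (illustrative)
dp = min_momentum_spread(delta_x)
print(f"dp >= {dp:.2e} kg·m/s, i.e. dv >= {dp / M_E:.2e} m/s for an electron")
```

Halving Δx doubles the minimum Δp: for an electron inside an atom, the velocity spread is already of the order of hundreds of kilometres per second.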

This limit derives from the fact that measurable quantities are subject to unpredictable fluctuations. If, for example, one wishes to measure the position of an electron in an atom with great precision, one has to hit it with photons of short wavelength, i.e. with a large momentum, so that the resulting recoil significantly and randomly alters the electron’s momentum. Conversely, if one measures the momentum of an electron, its position is disturbed. Consequently, one cannot know the future states of an atomic system with certainty, but can only predict the probabilities of its possible different states.
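The trade-off described above can be sketched as an order-of-magnitude estimate (the heuristic “Heisenberg microscope”): the position resolution is taken to be about one wavelength λ, and the momentum disturbance about the photon momentum h/λ. This is an assumption-laden simplification, not a rigorous bound; the sample wavelengths and function name are illustrative.

```python
H = 6.62607015e-34  # Planck constant, J·s


def microscope_tradeoff(wavelength_m: float) -> tuple[float, float]:
    """Heuristic estimate: position resolution ~ wavelength,
    momentum disturbance ~ photon momentum h / wavelength."""
    delta_x = wavelength_m
    delta_p = H / wavelength_m
    return delta_x, delta_p


for lam in (500e-9, 1e-10, 1e-12):  # visible light, X-rays, gamma rays (m)
    dx, dp = microscope_tradeoff(lam)
    print(f"lambda = {lam:.0e} m: dx ~ {dx:.0e} m, dp ~ {dp:.2e} kg·m/s, product ~ {dx * dp:.2e} J·s")
```

Whatever wavelength is chosen, sharper position information is paid for with a larger momentum kick, and the product stays of the order of h.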

Any hypothesis of a complete unification of the known laws of physics will require changes to one of the two dominant theories, if not to both, since the conflict between the principles on which they are respectively founded seems irreconcilable. Although both theories work well in practice, the suspicion that at least one of them may not be fundamentally correct is a good reason for further in-depth investigation. It would be necessary to develop alternative theories in order to find hidden connections, or different principles capable of explaining the same phenomena.

As guidelines for such research, one could start by assuming the validity of quantum theory and then try to introduce innovative ideas about the structure of space-time, exploring specific areas of mathematics such as topology and the modern theory of knots. Alternatively, one could start from the theory of General Relativity and investigate how it might modify quantum theory so as to incorporate it. Furthermore, one cannot exclude that both theories will have to be modified in one way or another. But if we abandon our confidence in the two major physical theories, how could we convince ourselves that we possess a complete and coherent theory?

To answer these questions, one has to take into account two radical limitations that make the expectation of a unified explanation of the physical world seem impossible: the uncertainty principle, already mentioned, at the basis of quantum theory, and Gödel’s Incompleteness Theorem (Kurt Gödel, 1906-1978), which concerns formal logical-mathematical systems. This theorem, published in 1931, establishes that any consistent formal system of axioms large enough to include the arithmetic of the natural numbers is incomplete, i.e. it contains true statements that cannot be proved within the system, and that such a system cannot prove its own consistency. In other words, no set of axioms that can be effectively listed allows all true mathematical statements to be proved: in this sense pure mathematics is inexhaustible. Therefore, according to the philosophical position of some physicists, such as Freeman Dyson, any attempt to formulate a Theory of Everything is bound to fail, since the restrictions imposed by Gödel’s theorem would affect every physical theory that aims to be both complete and self-consistent: to be empirically testable, such theories must include at least a description of arithmetical nature.

Clearly, the uncertainty principle and Gödel’s Incompleteness Theorem represent two fundamental achievements of human thought. Nevertheless, careful reflection on them may lead us to the legitimate suspicion that some of the concepts and logical principles used so far to study and extend our knowledge of physics and mathematics could be founded on erroneous beliefs and could therefore be inadequate for solving the fundamental issues in their respective domains.

Quantum Mechanics, although in extraordinary agreement with experimental results, leads our knowledge of the world into an obscure zone dominated by strange notions such as intrinsic indeterminacy, superposition of states and the consequent probabilistic assertions, and it is unclear whether the latter derive from our own temporary ignorance or from a natural limit to intelligibility.

Among the plausible interpretations of the theory, the probabilistic one predominates. This interpretation is based on the idea of a fundamental uncertainty in physical processes and of an intrinsic incompleteness of our conceptual apparatus. Bohr’s work lies at the basis of the philosophical and scientific paradigm of the Copenhagen and Göttingen schools and represents an extreme form of operationalism within neo-empiricism, i.e. the project, which originated in the early 20th century, of renewing the philosophical conception of science in order to re-examine the nature and significance of scientific concepts after the old mechanistic vision of the world had been abandoned in favour of a new relativistic vision of the quantum world.

Bohr’s interpretation, also known as the “orthodox interpretation of quantum mechanics”, maintains that the theory is “complete” and that its principles do not entitle us to ascribe well-defined properties to atomic systems until they have been measured. This implies denying the “principle of reality” of nature, in other words, the real existence of phenomena independently of measurement procedures. Bohr was in fact convinced that no additional assumptions were needed, believing that an objective conception of reality is not essential to physics.

Several scientists, such as Sommerfeld, Born, Pauli, Jordan and Dirac, agreed with Bohr’s interpretation, while others, supporters of realism and causality, such as Planck, Einstein, Schrödinger, de Broglie and Bohm, strongly opposed it. The animated controversies between these two groups of physicists, whom we will simply call “anti-realists” and “realists” respectively, had the positive effect of stimulating research. Despite their many efforts, however, the realists never found arguments strong enough to demolish the probabilistic conception of the new theory and, consequently, the assumption of its completeness. The most significant of these efforts was a thought experiment devised in 1935 by Einstein together with the physicists Boris Podolsky (1896-1966) and Nathan Rosen (1909-1995), known as EPR after their initials. Its aim was to demonstrate that quantum mechanics is incomplete, by arguing that quantum systems possess well-defined properties, such as position and momentum, independently of measurement.

The memorable disputes between the contrasting philosophical and epistemological positions of Einstein and Bohr went on for years, until realism as conceived by Einstein was declared defeated and, unexpectedly, in the 1980s, a new phenomenon called the “non-local effect” came to deepen the mysteries of the quantum world: two or more particles that have interacted with each other seem to form an inseparable whole even when they are light years apart. When subjected to measurement with appropriate experimental apparatus, these particles give the impression of being telepathic, or of exchanging information at superluminal speed.

During the long period of debate between realists and anti-realists, scientific research proceeded at an ever faster pace and, thanks to technological development, it became possible to launch a programme for studying the structure of matter. The first great turning point came in 1928, when Paul Dirac (1902-1984) formulated the relativistic equation of the electron, which led to the prediction of antimatter; its existence was proved empirically in 1932 by Carl D. Anderson (1905-1991) and confirmed soon afterwards by Giuseppe Occhialini (1907-1993) and Patrick Blackett (1897-1974).

After the discovery of the positron (the antiparticle of the electron), the race began to build ever more powerful particle accelerators, since the only known way to study particles is to accelerate two beams of them to very high kinetic energies, make them collide and record the resulting effects, from which information about their composition and about the forces acting inside them can be extracted (the products of the collisions include new particles more massive than the original ones, the extra mass coming from the kinetic energy involved in the collision).
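The energy available for creating new, more massive particles can be estimated with the standard relativistic formula for the centre-of-mass energy √s (in units where c = 1). The sketch below compares two equal beams colliding head-on with a single beam striking a proton at rest, which shows why colliding beams are preferred. The beam energy of 7000 GeV is an illustrative assumption, and the function names are hypothetical.

```python
import math

M_P_GEV = 0.938272  # proton rest energy, GeV


def sqrt_s_colliding(e_beam_gev: float) -> float:
    """Energy available for new particles when two equal beams collide head-on."""
    return 2 * e_beam_gev


def sqrt_s_fixed_target(e_beam_gev: float, m_gev: float = M_P_GEV) -> float:
    """Available energy when a beam particle of total energy e_beam_gev hits
    a particle of the same mass at rest: s = 2m^2 + 2*E*m (c = 1 units)."""
    return math.sqrt(2 * m_gev**2 + 2 * e_beam_gev * m_gev)


e_beam = 7000.0  # illustrative beam energy, GeV
for label, sqrt_s in (("colliding beams", sqrt_s_colliding(e_beam)),
                      ("fixed target", sqrt_s_fixed_target(e_beam))):
    print(f"{label}: sqrt(s) = {sqrt_s:.1f} GeV, i.e. about {sqrt_s / M_P_GEV:.0f} proton masses")
```

In the head-on case all the kinetic energy is available for producing new mass; with a fixed target most of it goes into the motion of the collision products.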

Today, in order to deepen knowledge of the structure of these products, researchers use high-energy physics and the modern quantum field theories. These theories incorporate the principles of Special Relativity, formulated by Einstein in 1905 (which unifies the concepts of space and time and implies the transformation of energy into matter and vice versa, according to the well-known equation E = mc², where m stands for mass and c for the speed of light), and introduce the new dual concept of “field-particle”: the field is an entity that manifests itself as a flux of particles, while each particle is a quantum associated with a field. A new idea was thus consolidated: anything that oscillates with a given frequency can only manifest itself in discrete units of mass m = nhν/c². This formula, which follows from the two equivalences E = nhν and E = mc², states that mass (for a single quantum, n = 1) equals Planck’s constant multiplied by the frequency and divided by the square of the speed of light.
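The relation m = nhν/c² can be run in both directions. As an illustration (an example chosen here, not taken from the text), the sketch computes the frequency of a single quantum (n = 1) whose energy equals the rest energy of the electron, and then recovers the electron mass from that frequency. The function names are hypothetical.

```python
H = 6.62607015e-34      # Planck constant, J·s
C = 299_792_458.0       # speed of light, m/s
M_E = 9.1093837015e-31  # electron mass, kg


def mass_from_frequency(nu: float, n: int = 1) -> float:
    """m = n*h*nu / c^2, combining E = n*h*nu with E = m*c^2."""
    return n * H * nu / C**2


def frequency_from_mass(m: float) -> float:
    """Frequency of a single quantum (n = 1) whose energy equals the rest energy m*c^2."""
    return m * C**2 / H


nu_e = frequency_from_mass(M_E)
print(f"electron: nu = {nu_e:.4e} Hz")
print(f"mass recovered from nu: {mass_from_frequency(nu_e):.6e} kg")
```

The resulting frequency, about 1.24 × 10²⁰ Hz, shows how large a frequency corresponds to even the lightest charged particle.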

The experimental observations aimed at investigating in depth the structure and behaviour of the subatomic world have finally led physicists to think that it consists exclusively of particles and immaterial fields, and many of them believe they are very close to identifying the ultimate constituents of physical reality. However, they do not intend to restrict themselves to compiling a list: they would also like to understand the nature of these fundamental entities and of the precise forces acting upon them, as well as the mathematical connections between these entities and forces. Furthermore, they hope soon to build a theory in which the quantum fields and the gravitational field are unified in a super-model of extraordinary simplicity and beauty.

The quantum field theories (QFTs) included in the Standard Model (SM) are Quantum Electrodynamics (QED), Quantum Chromodynamics (QCD) and the Electroweak Theory (EWT). The SM was developed in the early 1970s, building among other things on the contributions of the physicists Murray Gell-Mann and George Zweig, who in 1964 independently proposed the existence of sub-nuclear particles, which Gell-Mann called “quarks” and which were first detected experimentally in 1969.

The Standard Model, which is still being developed, describes the three forces acting within the atom: 1) the electromagnetic force, which binds electrons to the nucleus in atoms, which in turn can combine to form molecules and molecular chains; 2) the strong nuclear force, which ensures the extraordinary stability of the nuclear structure (since all the protons of a nucleus are positively charged, the nuclear force must be extraordinarily strong in order to hold them together against the enormous electric repulsion acting among them at extremely short distances, approximately 10⁻¹³ cm); 3) the weak nuclear force, responsible for the decay of the neutron (more precisely, its transformation into a proton with the emission of an electron and an antineutrino) and involved in the explosions of very massive stars as supernovae and hypernovae. The electromagnetic and weak forces were unified in the EWT in the 1960s by Sheldon Glashow, Abdus Salam and Steven Weinberg (Nobel Prize, 1979), and the W and Z particles predicted by the theory were discovered in 1983 at CERN’s Super Proton Synchrotron, thanks to the work of Carlo Rubbia and Simon van der Meer (Nobel Prize, 1984).
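The energy released in the neutron decay mentioned above follows from the mass difference between the initial and final particles, via E = mc². The sketch below uses rounded rest energies in MeV and neglects the antineutrino mass (below 1 eV); the function name is hypothetical.

```python
# Rest energies in MeV (rounded reference values)
M_NEUTRON = 939.5654
M_PROTON = 938.2721
M_ELECTRON = 0.5110
# The antineutrino mass is below 1 eV and is neglected here.


def beta_decay_q_value() -> float:
    """Energy released in n -> p + e- + antineutrino, shared as kinetic energy of the products."""
    return M_NEUTRON - M_PROTON - M_ELECTRON


q = beta_decay_q_value()
print(f"Q ~ {q:.3f} MeV")
```

Because the neutron is slightly heavier than a proton plus an electron, the decay is energetically allowed and releases about 0.78 MeV.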

As far as we know, four fundamental forces operate in our world: electromagnetic, weak, strong and gravitational. Each of them involves the exchange of one or more particles called “virtual”: the photon for electromagnetism, the W⁺, W⁻ and Z⁰ for the weak interaction, gluons for the strong interaction and the graviton for gravity. With the exception of the graviton, which has never been observed, these descriptions of interactions as exchanges of virtual particles are well-established laws of nature.

This type of explanation has an important consequence: a theory capable of describing all the fundamental particles will also be able to describe all the forces. If these particles and forces, or this hypothetical “unit”, are not a “bottomless pit”, that is, an infinite sequence of ever smaller components, it seems reasonable to imagine that an ultimate level of physical reality exists. But at this point the crucial problem is how to conceive a fragment of reality whose indivisibility is comprehensible.

Researchers who study the composition of matter consider some of its components indivisible for a rather simple reason: in experiments conducted at the energies available today, some particles, such as electrons, appear to have no structure, in other words no hard core off which another particle fired at high speed would bounce. In this sense, the indivisibility of a particle in physics is a purely conventional concept. One therefore cannot rule out that, at higher energies, particles considered indivisible today will turn out not to be so (at present, electrons and quarks, as well as gluons and the W⁺, W⁻ and Z⁰ particles, show no measurable size down to a radius r < 10⁻¹⁸ cm).

Clearly, if we hope to build a unified and comprehensible theory of the physical world, it will be necessary to gain knowledge of the ultimate constituents of matter and, should they exist, understand their genuine properties. However, such knowledge will never come from theories based exclusively on measurement of physical quantities, since during experiments there will always be a margin of uncertainty corresponding at least to Planck’s constant. Planck himself asserted that “it is the existence of the indivisible quantum of action that fixes a limit beyond which none of the most accurate measurement methods could help us to solve the questions about the real processes occurring in the micro-world”.

On the one hand, in the Standard Model of particle physics the hypothetical elementary particles are considered point-like (dimensionless), which refers to concepts of Euclidean geometry (which can be regarded as a theory of the properties of physical space rather than as a branch of mathematics). On the basis of this assumption, applying calculus to physical quantities such as the electric field of the electron, which varies in inverse proportion to the square of the distance, produces a proliferation of infinite quantities. Physicists have found a way to overcome this anomaly using a questionable strategy called “renormalization”, which consists in redefining the scale by assuming that the zero-dimensional source to be measured has the value obtained empirically at an arbitrarily small, but non-zero, distance. This procedure is undoubtedly a useful expedient for avoiding meaningless results. However, it has limits: it no longer works when the gravitational field is taken into account, and for this reason it could be interpreted as a sign that something is wrong with the fundamental assumptions of the theory.
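Why a point-like source produces infinities can be seen in a classical toy model (an illustration chosen here, not the procedure used in quantum field theory): the electrostatic energy of a charge e spread over a thin spherical shell of radius r is e²/(8πε₀r), which grows without limit as r shrinks to zero. The sketch compares it with the electron’s rest energy; the sample radii, including 10⁻²⁰ m (= 10⁻¹⁸ cm), are illustrative, and the function name is hypothetical.

```python
import math

E_CHARGE = 1.602176634e-19    # elementary charge, C
EPS0 = 8.8541878128e-12       # vacuum permittivity, F/m
M_E_C2_J = 8.1871057769e-14   # electron rest energy, J


def shell_self_energy(radius_m: float) -> float:
    """Electrostatic energy e^2/(8*pi*eps0*r) of a charge e spread on a thin spherical shell."""
    return E_CHARGE**2 / (8 * math.pi * EPS0 * radius_m)


for r in (1e-15, 1e-18, 1e-20):
    ratio = shell_self_energy(r) / M_E_C2_J
    print(f"r = {r:.0e} m: self-energy = {ratio:.2e} x electron rest energy")
```

The self-energy scales as 1/r: every factor of ten in shrinking the radius multiplies it by ten, and for a truly point-like charge (r = 0) it diverges.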

On the other hand, if we imagined particles as very small spheres (objects belonging to a familiar vision of space), we would again encounter a serious problem: a small sphere implies the idea of three-dimensional space and, in this specific hypothesis, would need the property of absolute rigidity. However, in the context of relativistic quantum physics, such a property would imply that, if the sphere were hit by another particle at a precise point on its surface, all the other regions would have to start moving simultaneously, thus violating the principle of the theory of relativity that forbids the transmission of information at superluminal speed. Furthermore, from a realistic point of view, neither the notion of point-like particles nor that of tiny rigid spheres allows us to understand how particles obtain their properties, such as the spin one-half of the electron. A more original idea would be required in order to explain elementarity in non-problematic terms.

In the past, with the aid of philosophy, mathematics and rather primitive instruments of observation, a simple image of a microscopic fragment of reality seemed inconceivable, since it was pursued through the naive idea of an impenetrable and eternal foundation. Today, despite experiments conducted in the light of new quantum theories (based on an ontology of fields) and with the aid of very advanced technologies, the picture presents dynamic configurations that are nonetheless equally inconceivable.

The classical idea of “continuum + matter”, based on the belief in a rigid determinism, has collided with the idea of “discontinuum + immateriality” of the relativistic vision of quantum fields, based on a fundamental indeterminacy.

In the light of current research, the future progress of science can be framed within a project with two important objectives: the first is the unification of the quantum fields; the second is a theory of complete unification of the quantum fields together with the gravitational field, in other words a unified description of all the entities that play a role in physical reality and of all the laws that govern their behaviour.

INTRODUCTION

The search for a final theory

Many physicists, adhering to all or part of the restrictions implied by the orthodox interpretation of quantum mechanics and to the idea of a fundamental limitation of the human conceptual apparatus, object that a unified physical theory cannot be achieved, and most of them are convinced that it will be impossible to shed light on certain mysterious aspects of the physical world, particularly of the quantum world.

Another objection to the possibility of formulating a physical theory that describes all the fundamental aspects of the world was raised by Popper (the founder of Critical Rationalism) in his evolutionary thesis, in which he compares scientific progress to an endless march towards an ideal of truth that can never ultimately be reached.

However, despite apparently insurmountable obstacles, many scientists, such as Theodor Kaluza, Oskar Klein, Abdus Salam, Steven Weinberg, John Wheeler, Alan Guth, Roger Penrose, Michael Green and John Schwarz, to mention only a few, have made interesting contributions to the search for a simple, unified theory capable of encompassing all the laws of nature, and some of them have gone further, creating and popularizing cosmological models that offer answers to questions once reserved exclusively for philosophers, theologians and mystics. Their proposals, full of provocative conjectures, have aroused lively scientific interest. Nonetheless, they all contain various elements introduced without any explanation.

At this point it is useful to clarify what we mean by a “complete physical theory” or “Theory of Everything”, since the philosophical positions of the scientists involved in the search for such a theory conflict to varying degrees, depending on how each of them identifies its essential features. If we set aside the extreme position of the anti-realists, who regard the uncertainty principle and the resulting probabilities as fundamental and irreducible characteristics of nature, and therefore consider quantum mechanics a definitive theory (capable of describing all there is to know about the physical world), we are left with the realist positions, some of which agree with Popper’s ideas while a few others reject his evolutionistic view of science entirely. Here is a brief outline of some of their different ways of conceiving a Theory of Everything.

Some physicists, such as David Deutsch, believe that a theory unifying the four fundamental forces of nature is a realistic goal, but exclude from its scope a vast range of phenomena such as life, thought, consciousness and creativity, and, of course, any answer to the profound questions about why the universe, with all that it contains, exists.

The work of the mathematician and physicist Roger Penrose, who has devoted many years of his life to rigorous interdisciplinary research, appears more ambitious. It aims at two interconnected objectives: a quantum theory of gravity, and a rational account of the relationship between physics, mathematics and mind, the latter involving the problematic phenomenon of consciousness. Penrose is convinced that the complex physical activity of the brain is not purely computational but must include some kind of activity that goes beyond computation and is capable of giving rise to states of awareness. For this reason he maintains, against the supporters of strong AI (Artificial Intelligence), that even the most elaborate computer could never possess the same properties as a human brain.

The English physicist John D. Barrow, in his book “Theories of Everything”, concludes by stating that “There is no formula that can deliver all truth, all harmony, all simplicity. No Theory of Everything can ever provide total insight. For to see through everything would leave us seeing nothing at all”.

Among the physicists who say they are annoyed by the idea of a theory capable of explaining everything, the Italian scientist Tullio Regge emphasizes the sense of mystery that accompanies human existence: “should we unveil the final theory which explains everything, I would be disappointed with the Universe and I would consider it the work of a novice.”

However, some scientists do not rule out the possibility of discovering, in some distant future, a complete and self-consistent description of all human knowledge. In that case, as the physicist Stephen Hawking used to say (before changing his mind), we would understand the “mind of God”. Supposing these scientists were right, how could we prove that such a theory corresponds to reality? It seems impossible to answer this question because, to say what reality is, we need a theory, and any theory remains open to being falsified in the future. Whenever fundamental hypotheses about the world are formulated, no consequence derived from them can be more reliable than the hypotheses themselves.

Like many others, I have always been fascinated, and at first disoriented, by the fact that cosmic reality exists, and life with it. But now, after years of studying various branches of science, I do not rule out the idea that all the fundamental aspects of our universe, although they give an impression of unfathomable mystery, may one day find a rational explanation. I therefore see no good reason to decree that we must live with this mystery permanently and, contrary to the convictions of certain physicists, I think its revelation, if possible, would be anything but disappointing!

Although I consider the search for a Theory of Everything realistic, I am aware that big problems nonetheless remain to be solved. First of all, quantum field theory, because of the uncertainty principle, describes only part of cosmic reality: the observer seems inextricably involved with the system under investigation and cannot extract from it, empirically, all the information needed to describe it. For this reason, the search for a complete description of reality will first require a re-examination of the distinction, or confusion, between what is called the “observer” and what is called the “observed”.

Other steps could also help significantly. Among them, a very promising contribution would be the design of an experiment capable of proving that quantum theory is incomplete and/or wrong. In that case, the whole scientific community, rather than small groups of isolated researchers, would mobilize its forces.

In any case, I think that a revolutionary idea is already under our noses and that, if we can grasp it, it will allow us to overturn the present scientific paradigm. The problems to be tackled will certainly be extremely difficult. Indeed, my own philosophical position is itself extreme since, in order to aspire to a rational and self-consistent unified theory of nature, I think it is also necessary to find satisfactory answers to the following questions:

To answer the first question, science generally refers to a non-eternal universe which, according to the standard Big Bang theory, was born relatively recently (13.8 billion years ago) and is not very much older than the stars or than life on Earth (which began roughly 4 billion years ago). But if the Universe is not eternal, how did it originate, and where do the laws that govern its evolution come from? If we wish to remain faithful to the spirit of science, that is, to avoid postulating transcendent realities of a religious or Platonic nature, we will have to find satisfactory answers to these two questions.

Many anti-Platonist scientists believe that the laws of nature (or the primary law from which they derive), together with space and time, must have generated themselves through physical processes during the earliest stages of the universe, since they could not have existed before its birth. Therefore, to explain the sudden birth of the universe coherently, these scientists will have to consider, whether they like it or not, the notion of “nothingness”: they will have to define this category of thought without falling into contradiction, and explain why physical reality and the laws that govern it are logical “implications of nothingness”.

To embark on what may seem a senseless mission, though I would call it the deepest of all conceivable quests of the human mind, we will have to discard many old concepts, formulate new questions, search through the mass of scientific data at our disposal and, above all, meditate thoroughly on the meanings hidden within the great intellectual conquests of the past century (particularly Planck’s elementary quantum, Heisenberg’s uncertainty principle and Gödel’s Incompleteness Theorems).

My reflections on the fundamental issues of physics, logic and mathematics that I attempt to address in this book (not without difficulty, I might add) have led me to conceive a wide variety of three-dimensional images, including some computer animations, and to describe a few conceptual and theoretical experiments. All this work is the product of passionate, wide-ranging research that began when I was very young and has been a constant companion throughout my life.

I will begin this book by describing some of the fundamental steps, and the related experiments, that paved the way to the formulation of quantum mechanics (hereafter also abbreviated QM). I should point out first that much of the first, fourth and fifth chapters, as well as the prologue, is addressed to readers who may not be very familiar with established physical theories, with the philosophical foundations of mathematics or with the unsolved issues of advanced scientific research.

In the second chapter I will tackle the complex matter of “measurement” in QM, the source of well-known “paradoxes” that create serious difficulties for our conception of reality if we accept Bohr’s assumption that the theory is complete. In this context I will describe a conceptual experiment named “The DAP (Dead-Alive Physicist) experiment”, which aims to refute Eugene Wigner’s well-known subjective interpretation of QM (related in some way to Bohr’s Copenhagen interpretation), originally proposed in order to preserve the completeness of the theory. The DAP experiment may be thought of as a variation on the original example of Schrödinger’s Cat in which there is no separation between the experimental apparatus and the observer. The way the observer is included in the experimental setup reflects the idea that quantum-mechanical processes and those of conscious perception should be intertwined. The DAP experiment is designed to undermine the idealistic interpretation of QM. In short, it refutes the assumption that the observer’s consciousness can, in some unspecified way, collapse the system into just one of its possible states. Put more simply, the idea captured by the maxim “the moon doesn’t exist when nobody is looking at it” is no longer tenable.

In the third chapter I will focus on the role of paradox in science and on the different philosophical positions concerning the fate of our possible knowledge, with particular reference to anti-realism and to Popper’s critical rationalism. In addressing paradox and its metamorphic character, I will put forward the idea that it originated as a conceptual error, made unavoidably during the evolution of human experience, which can now be recognized and removed.

The fourth chapter concerns the relationship between the mathematical and the physical worlds. Starting from the discovery of non-Euclidean geometries at the beginning of the XIX century, I will summarize the essential steps that led Gödel to state the well-known theorems that changed our picture of the logical-mathematical edifice. I will also make some personal observations on the philosophical implications of his results, which are still debated today. Then, sharing the philosophical ideas Paul Feyerabend sets out in his book “Against Method: Outline of an Anarchistic Theory of Knowledge”, I will reach a conclusion that may sound heretical: the traditional criteria rigorously established as the basis of scientific theories have reached their natural end, meaning that they no longer serve attempts to formulate a theory that completely unifies the quantum fields and the gravitational field, and should therefore be abandoned in favour of revitalized human resources. I am thinking mainly of a reconciliation of science with philosophy, but also of an ever closer connection between science and art.

In the fifth chapter I will describe how various scientists have opened the way towards new concepts of space and matter, and outline a brief history of string and superstring theories, Penrose’s twistor space and Carlo Rovelli’s LQG (Loop Quantum Gravity).

The sixth chapter concerns a question of metaphysics that fundamentalist philosophers and physicists consider the deepest of all: “why is there something rather than nothing?” I believe this is a badly phrased and misleading question, and examining it will lead me to address the following notions: nothingness, entities, causality, void and energy. Conscious experience, together with the related problem of the interaction between mind and body, will be discussed separately.

As for consciousness, which stands at the crossroads of all these questions and has been approached in many different philosophical ways, in chapter seven I will propose a modernized panpsychism or, more precisely, its conversion into a physicalist view. My personal idea is that all quantum systems possess a quality of self-experience associated with a fundamental dynamic physical property, which could be imagined as a periodic self-referential action. To suggest such an action, I have made a particular computer animation that may remind you of the paradoxical symbolic figure of the Ouroboros (common to many ancient cultures), a snake or dragon in the act of eating and cyclically regenerating its own body. More precisely, in this video, available on my website (www.carloroselli.com), I depict a topological object suggesting a cyclical self-penetration, which I suppose to be equivalent to the physical process of self-proto-experience. Owing to its intrinsic and relational dynamic properties, such a process could represent a universal mathematical model for understanding how life, including feedback control, may work at all its levels.

From this perspective, conscious experience is not an emergent phenomenon arising at a certain level of biological complexity, but a property of the physical world which, starting from its fundamental quantum level made up of single periodic loops, can under suitable circumstances evolve and increase in intensity along a scale with a finite number of specific levels of loop organization. This means, for example, that the self-experience of a thermostat is no more intense than that of its individual constituents, whereas the self-experience of chromosomal DNA is more intense than the sum of that of its components, since its structure belongs to a higher level of organization. More precisely, chromosomal DNA is not just a simple loop or a set of separate loops, but a set of elementary loops (without free ends) enclosed in a super-loop that may be called a “second-level loop”. Simple single-celled prokaryotic organisms (bacteria), which have neither a nucleus nor a complex cell structure, could provide an example of a second-level super-loop, while multicellular eukaryotic organisms, in which the DNA is located inside the nucleus, could represent a third-level loop. Both second- and third-level loops behave as wholes and, compared with a fundamental loop, possess new intrinsic properties or functions, such as replication (which, in eukaryotic organisms, takes place at several points of each chromosome simultaneously), as well as new relational properties, such as complex interactions with their environment (photosynthesis, in particular).

In my particular conception of the world, the six notions discussed in chapters six and seven (nothingness, entities, causality, void, energy and conscious experience) are connected with one another, and in the eighth chapter they will lead me to investigate the notion of “nothingness” in more depth and to define what might reasonably be conceived as the Principle of Sufficient Reason, i.e. the principle that guarantees a logically inevitable and self-sufficient domain to the Totality of existing entities.

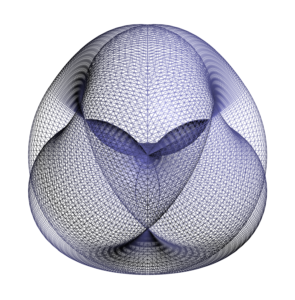

Chapters IX and X concern the question of how to conceive atomic particles (in the strict sense of the Greek word, that is, indivisible ones) through geometrical and analytical procedures. I will begin by proposing a skew curve whose high degree of symmetry makes it particularly simple. Reflecting on the geometry of the sphere, I was able to derive from that curve two infinite sets of figures, called “Spherical Waves” or “Spherical Loops”, one countable and the other uncountable. Within the uncountable set I have identified a finite subset of interesting Spherical Waves that I call “Platonic” because they are associated with the five regular convex polyhedra. Driven by the need to explore new forms of expression, I began making abstract sculptures in flexible plastic, iron and other materials; in doing so I perceived a plausible connection between those figures and quantum wave-particles such as electrons, and eventually I found a way to propose a reasonable description of their intrinsic and relational dynamic properties.

In chapter XI, these properties will help to give a first idea of how self-organization might work at certain levels of the physical world. I will show how the elementary physical systems described in the two preceding chapters can be assembled into a variety of compositions called “Harmonic Structures”, in which the movements of all the elements are perfectly synchronized with one another. A wide range of illustrations and computer animations give a fairly simple idea of these structures.

In chapter XII, after a digression on the debate between Einstein and Bohr and on the related question of whether or not nature obeys Bell’s Theorem, I will propose a theoretical experiment, in three different versions, which aims to refute the simple version of EPR and Bell’s Theorem devised by David Mermin in 1985, and to revisit Einstein’s philosophical position against the widespread conviction that QM is a complete theory and that its probabilities are non-epistemic.

Compared with Mermin’s, this experiment has the advantage of introducing two innovative physical concepts. The first is the description of pairs of spherical loops that simulate electron-positron pairs in the spin singlet state and make the difference between a negative and a positive charge clearly understandable. The second concerns the intrinsic angular momentum of both particles, each of which spins around a number n of axes, with n ≥ 3. When the electron (and/or the positron) interacts with the inhomogeneous magnetic field of a Stern-Gerlach apparatus, only one of its axes, called the “DSA” (Dominant Spin Axis), plays a role in the measuring process.

This experiment violates what Mermin called the “Baby Bell inequality” and could in principle be tested, although not with current technology. Furthermore, these innovative concepts, which can be clearly visualized, offer an understandable alternative to the counter-intuitive notion of superposition of quantum states (particularly in the case of incompatible spin measurements). The chapter concludes with a rational explanation of the results obtainable in all Stern-Gerlach experiments.

Should one of the versions of this experiment be confirmed, or should the scientific community accept the properties of one of my spherical loops as a model of the electron, much more reasonable than the current point-like model, then the principles on which QM is founded would have to be revised, and the irrational notion of “superposition of quantum states” replaced by the classical concept of a “mixture of quantum states”, as my description makes clear.

In any case, my main hope is that the new ideas proposed in this book will give physicists an opportunity to reconsider all theories based on notions that imply anomalies and paradoxes, which in my view are most likely responsible for the long-lasting stagnation of the current scientific paradigm.

I am aware that scientists are generally reluctant to accept radical changes in the principles on which mathematical, logical and physical theories are founded. As far as I know, they are rather accustomed to living with paradox and its metamorphic character, and are not interested in searching for its origin, perhaps because they consider it nonexistent or unreachable (a point discussed at length in chapter III). I would therefore like to stress my impression that the failure of all attempts so far to unify General Relativity and Quantum Mechanics may be attributed not only to this attitude of scientists, but mainly to a lack of radically new ideas.